Transforming Images with ControlNet and Stable Diffusion: A Step Forward in Style Transfer

In this post we’ll compare different image stylization techniques and explore why ControlNet and Stable Diffusion make a winning combination: a stylizer that can transfer arbitrary styles and generate immersive, high-quality results.

ControlNet Samples Gallery screenshot 1

The concept of image stylization

Image stylization is the process of combining the style of one input image with the content of another to generate a new image that has the style of the first and the content of the second. The content of an image consists of its objects, shapes, and overall structure and geometry. For example, the content of the image below is a cat:

On the other hand, the style of an image has to do with its textures, colors, and patterns. For example, the image below depicts a painting by Claude Monet (Nymphéas); its style is distinctive and characteristic of Monet’s work:

Nymphéas, 1915, Musée Marmottan Monet, Public Domain

In one sense, image stylization is like a computer algorithm simulating the work of an artist, and the creative results have many applications in art, fashion, gaming, entertainment, and other fields. The following is an example of the cat image stylized with the Monet painting:

A cute cat stylized with Monet’s Nymphéas painting

Popular image stylization techniques

Before the rise of large text-to-image models and related applications popularized by DALL-E, Midjourney, Stable Diffusion, and the like, smaller Neural Style Transfer (NST) models were trained to do image stylization. These models infer the style directly from one or more style images and apply it to the content image. In general they do a decent, though rarely great, job of stylizing images. The following are examples of single, multiple, and specialty style transfer.

An example of Neural Style Transfer with a single style

An example of Neural Style Transfer with multiple styles

An example of specialty Neural Style Transfer with a cartoon style

Released and open sourced in 2022, Stable Diffusion is a deep learning text-to-image model that generates detailed images conditioned on text descriptions. Image-to-image (img2img for short) is a method that generates new AI images from an input image and a text prompt. The output image follows the color and composition of the input image. Naturally, img2img can be used for image stylization, and it is more flexible than NST algorithms because the style is defined by a text prompt, which can describe an essentially unlimited variety of styles.

When you stylize images with Stable Diffusion img2img, the style may transfer relatively well, but only if you pick a suitable style and an appropriate style strength, and a good match is not easy to find. Keep in mind that a lower style strength preserves more of the original image, while a higher strength applies more of the selected style. Take this portrait of a woman as an example:

Suppose you want to style it with Renaissance art. With a weaker style strength (say 20%), you’ll get results that resemble the portrait but show little Renaissance style. With a stronger style strength (say 80%), you’ll get results in a somewhat Renaissance style that deviate considerably from the original portrait. In fact, however you adjust the style strength in this case, it’s very hard to get a convincing result, i.e. a portrait that looks like a Renaissance painting.

Portrait of a woman stylized with Renaissance art using img2img, style strength 20%

Portrait of a woman stylized with Renaissance art using img2img, style strength 50%

Portrait of a woman stylized with Renaissance art using img2img, style strength 80%
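To make the strength trade-off above more concrete, here is a minimal sketch of how a style strength can translate into denoising work. It assumes the style strength behaves like the denoising strength in common open-source img2img implementations, where the input image is partially noised and only the remaining fraction of the denoising steps is run. How the app used for the samples maps its style strength internally is not stated in this post, and the function name `img2img_schedule` is hypothetical.

```python
from typing import Tuple


def img2img_schedule(num_inference_steps: int, strength: float) -> Tuple[int, int]:
    """Return (steps_skipped, steps_run) for a strength in [0, 1].

    Assumption: strength is the fraction of the denoising schedule that is
    actually run on the noised input image. A low strength skips most steps,
    so the output stays close to the input; a high strength runs most steps,
    so the text prompt (the style) dominates.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    steps_run = min(int(num_inference_steps * strength), num_inference_steps)
    steps_skipped = num_inference_steps - steps_run
    return steps_skipped, steps_run


# The three strengths used in the sample images, with a 50-step schedule.
for strength in (0.2, 0.5, 0.8):
    skipped, run = img2img_schedule(50, strength)
    print(f"strength {strength:.0%}: skip {skipped} steps, run {run} steps")
```

Under this assumption, a single number controls both how much of the input survives and how much of the style is applied, which is why no setting gives both at once.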

ControlNet, however, offers much better control than img2img. Using the same portrait image, if you stylize it with Renaissance art using ControlNet instead of img2img, you can easily generate results that contain a person of similar shape and posture but in Renaissance style, i.e. wearing the headdress and clothing of that era in a Renaissance setting.

Portrait of a woman stylized with Renaissance art using ControlNet (Canny), style strength 50%

Portrait of a woman stylized with Renaissance art using ControlNet (sketch), style strength 50%

Portrait of a woman stylized with Renaissance art using ControlNet (depth), style strength 50%

What is ControlNet?

ControlNet is a neural network structure that controls diffusion models by adding extra conditions. In plain text-to-image use, Stable Diffusion takes the text prompt as its sole conditioning input; ControlNet extends this by adding an extra conditioning input to the model to guide the outcome. One of the most important features of ControlNet is its ability to preserve the structure and content of the input image while smoothly applying the desired style or effect.

ControlNet Samples Gallery screenshot 2

How does it work?

There are many ways to extract the main structure of an image. One classic algorithm is the Canny edge detector, which identifies the outlines in the image. The detected edges are saved as a control map and passed to the diffusion model as an extra conditioning input, in addition to the text prompt. The process of extracting specific information (in this case, edges) from the input image is referred to as annotation in the research article, or preprocessing in the ControlNet extension. Apart from edge detection, there are other preprocessing methods, such as sketch, depth, and pose maps, that help define the main structure of an image. For example, the OpenPose detection model can identify human poses, including the locations of the hands, legs, and head.

Canny (top left), sketch (top right), depth (bottom left) and pose (bottom right) maps of the input image (portrait of a woman)
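As a rough illustration of the preprocessing step described above, the sketch below turns a grayscale image into a black-and-white edge map. It is not the Canny algorithm itself: it only thresholds the Sobel gradient magnitude, whereas Canny also smooths the image, thins the edges with non-maximum suppression, and links them with hysteresis thresholding. The function name `sobel_edge_map`, the toy image, and the threshold value are all assumptions made for this example.

```python
from typing import List

Image = List[List[float]]  # grayscale pixels, one inner list per row


def sobel_edge_map(gray: Image, threshold: float) -> List[List[int]]:
    """Return a control-map-like image: 255 where an edge is found, else 0.

    Simplified stand-in for Canny: Sobel gradient magnitude plus a single
    threshold. Border pixels are left at 0 because the 3x3 kernel does not
    fit there.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal gradient: right column minus left column.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]) - (
                gray[y - 1][x - 1] + 2 * gray[y][x - 1] + gray[y + 1][x - 1]
            )
            # Vertical gradient: bottom row minus top row.
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]) - (
                gray[y - 1][x - 1] + 2 * gray[y - 1][x] + gray[y - 1][x + 1]
            )
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 255
    return edges


# Toy 6x6 image: dark left half, bright right half, so one vertical outline.
toy_image = [[0.0] * 3 + [255.0] * 3 for _ in range(6)]
control_map = sobel_edge_map(toy_image, threshold=200.0)
for row in control_map:
    print(row)
```

The resulting map keeps only the outline between the dark and bright regions and discards color and texture. That is the kind of structural information a control map carries into the diffusion model alongside the text prompt.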

Conclusion

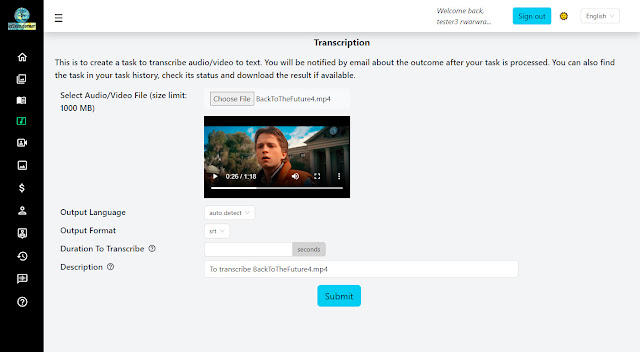

Traditional Neural Style Transfer is simpler and faster to run and produces more predictable results, yet the quality may not be satisfactory. As a step forward in style transfer, ControlNet and Stable Diffusion make a winning combination: a stylizer that can transfer arbitrary styles and generate immersive, high-quality results.

You can find various image stylization tools in the aiTransformer app, which was used to generate most of the sample images above. The app also includes a ControlNet Samples Gallery to help you understand how versatile ControlNet is for image stylization. The gallery shows 9 input images: portraits of a boy, a girl, a man, a woman, a dog, and a cat, plus 3 scenic landscapes. It offers 48 different styles to apply, ranging from classic art styles like Renaissance, Impressionism, and Cubism to contemporary art styles like Photorealism, Pop Art, and Fantasy, as well as more modern styles like Cyberpunk, Steampunk, and Midjourney textual inversion styles. Three output variations are included for each combination of input image and style, for a total of 9 × 48 × 3 = 1296 sample images. Go ahead and explore the different input images and styles, and see what you like and don’t like. Your comments are welcome!
